Burp Suite engagement tools

They allow deep, clean discovery and are strongly recommended for crawling a specific scope.

vhosts enumeration

gobuster vhost -u http://{targetIP or hostname} -w {wordlist} --append-domain

ffuf -w ~/wordlists/subdomains.txt -H "Host: FUZZ.$ipscope" -u http://$ipscope -fs 1495

subdomain enumeration

Difference from vhosts

Vhosts are a web server feature: one server decides which site to serve from the Host header. Subdomains are DNS records.
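To make the distinction concrete, here is a minimal sketch, assuming `curl` and `dig` are installed. The helper names `vhost_size` and `dns_lookup` are made up for these notes, and the IP and hostnames in the usage comments are example values. A vhost candidate is tested by sending a Host header to the server's IP; a subdomain candidate is tested by asking DNS.

```shell
# Hypothetical helpers, not part of gobuster or ffuf.

# Response size in bytes when asking server $1 for virtual host $2.
# A size that differs from the baseline of a nonsense name suggests a real vhost.
vhost_size() {
  curl -s -H "Host: $2" "http://$1/" | wc -c | tr -d ' '
}

# DNS answers for name $1; empty output means no A record came back.
dns_lookup() {
  dig +short A "$1"
}

# Usage (example values, replace with your scope):
#   vhost_size 10.10.10.10 doesnotexist.target.htb   # baseline size
#   vhost_size 10.10.10.10 admin.target.htb
#   dns_lookup admin.target.htb
```

The baseline size is the kind of value passed to ffuf's `-fs` filter (1495 in the ffuf vhost command above).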

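gobuster dns -d {hostname} -w {wordlist}

Note: in gobuster 3.x the dns mode takes the domain with -d rather than -u. Flag names have changed between releases, so check gobuster dns --help on your version.

SecLists is a very useful repository, available directly on Kali. It can be used for enumeration, fuzzing, etc.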

directory/file enumeration

ffuf -u http://{target IP or hostname}/FUZZ -w {wordlist}

file extension

Add

-e .{file_extension}

to request each wordlist entry again with the specified file extension appended.
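As a rough illustration of the effect of `-e` on the wordlist (a sketch of the behaviour, not ffuf's actual implementation): each entry is tried as-is and once more per extension. `expand_ext` is a hypothetical helper name.

```shell
# Print each word from stdin, followed by that word with every extension appended.
# $1 is a comma-separated extension list, e.g. ".php,.bak"
expand_ext() {
  exts=$(printf '%s' "$1" | tr ',' ' ')
  while IFS= read -r word; do
    printf '%s\n' "$word"
    for ext in $exts; do
      printf '%s%s\n' "$word" "$ext"
    done
  done
}

# Usage:
#   printf 'admin\nlogin\n' | expand_ext ".php,.bak"
```

This also shows why long extension lists are costly: the number of requests grows with every extension added.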

Good wordlists :

- seclists/Discovery/Web-Content/common.txt

- seclists/Discovery/Web-Content/big.txt

- seclists/Discovery/Web-Content/raft-large-directories.txt

- seclists/Discovery/Web-Content/directory-list-2.3-medium.txt
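The commands in these notes rely on `$ipscope` and `$port` being set and on SecLists being installed. A minimal sketch, assuming the Kali location `/usr/share/seclists`; the IP, the port, and the helper name `first_existing` are placeholders, not part of any tool.

```shell
# Scope variables used by the ffuf commands in these notes (example values).
ipscope="10.10.10.10"
port="443"

# Print the first argument that is a readable regular file; fail if none is.
first_existing() {
  for f in "$@"; do
    if [ -f "$f" ] && [ -r "$f" ]; then
      printf '%s\n' "$f"
      return 0
    fi
  done
  return 1
}

# Usage:
#   base=/usr/share/seclists/Discovery/Web-Content
#   wordlist=$(first_existing "$base/common.txt" "$base/big.txt") \
#     || echo "SecLists not found under $base" >&2
```

Checking the path first avoids launching a long scan that fails immediately on a missing wordlist.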

Example of a complete command used for file enumeration (set the scope variables beforehand):

ffuf -w /usr/share/seclists/Discovery/Web-Content/big.txt -u https://$ipscope:$port/FUZZ -ic -e .php,.html,.txt,.bak,.js

Directory enumeration :

ffuf -w /usr/share/seclists/Discovery/Web-Content/raft-large-directories.txt -u https://$ipscope:$port/FUZZ/ -ic -recursion

Fuzzing on POST requests :

ffuf -w /usr/share/seclists/Discovery/Web-Content/big.txt -u https://$ipscope/path/ -X POST -H "Content-Type: application/x-www-form-urlencoded" -d "param_you_want_to_fuzz=FUZZ"

Use Burp while fuzzing to integrate findings

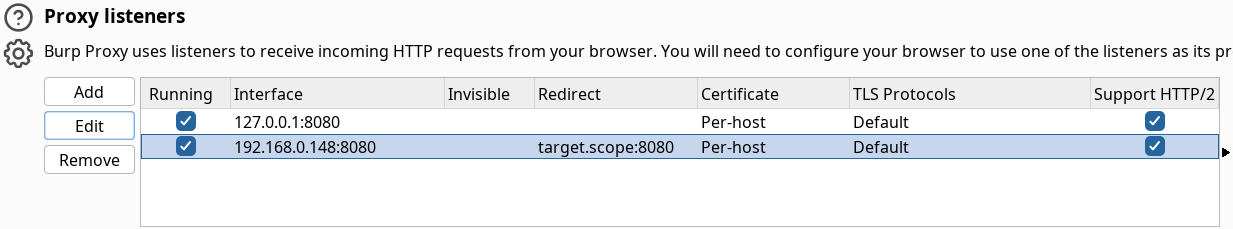

We can add a proxy listener that listens on a chosen IP and port and redirects to our target scope, then point our enumeration tools at that listener so the requests show up in Burp's history.
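A minimal sketch of pointing ffuf at such a listener. The listener address `127.0.0.1:8081` and the helper name `listener_url` are assumptions for illustration; use whatever you configured in Burp.

```shell
# Assumed Burp listener, bound locally and set to redirect to the target
# host and port. Example values only.
listener_ip="127.0.0.1"
listener_port="8081"

# Build the ffuf URL that goes through the listener instead of the target.
listener_url() {
  printf 'http://%s:%s/FUZZ\n' "$listener_ip" "$listener_port"
}

# Usage:
#   ffuf -w /usr/share/seclists/Discovery/Web-Content/big.txt -u "$(listener_url)" -ic
#
# Alternative without a redirecting listener: replay only the matches through
# Burp's regular proxy (assumed here on 127.0.0.1:8080) with -replay-proxy.
#   ffuf -w /usr/share/seclists/Discovery/Web-Content/big.txt \
#     -u "https://$ipscope:$port/FUZZ" -ic -replay-proxy http://127.0.0.1:8080
```

The redirecting listener records every request in Burp, while `-replay-proxy` keeps the history limited to hits.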

SSL/TLS certificates

Check the certificate served by the website. You can also look up every certificate issued for the domain on crt.sh.
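Hostnames listed in a certificate's subjectAltName field are often new targets. A minimal sketch, assuming OpenSSL 1.1.1 or later (for the `-ext` option); `san_hosts` is a hypothetical helper and `example.com` is a placeholder.

```shell
# Turn the subjectAltName text printed by openssl into one hostname per line.
san_hosts() {
  tr ',' '\n' | sed -n 's/^[[:space:]]*DNS:\(.*\)$/\1/p' | sort -u
}

# Usage (placeholder host):
#   host=example.com
#   openssl s_client -connect "$host:443" -servername "$host" </dev/null 2>/dev/null \
#     | openssl x509 -noout -ext subjectAltName | san_hosts
#
# Certificate transparency lookup on crt.sh (%25 is a URL-encoded % wildcard):
#   curl -s "https://crt.sh/?q=%25.example.com&output=json"
```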

Server info

Wappalyzer as a Firefox extension, or WhatWeb:

whatweb --no-errors {target IP or hostname}

robots.txt

Just a reminder to check it: disallowed entries often point to paths worth a look.
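A small sketch for pulling the listed paths out of a robots.txt so they can be fed into further enumeration. `robots_paths` is a hypothetical helper name.

```shell
# Print the paths from Allow/Disallow rules of a robots.txt read from stdin.
# Empty rules and trailing comments are dropped.
robots_paths() {
  tr -d '\r' \
    | grep -iE '^[[:space:]]*(dis)?allow:' \
    | sed -E 's/^[^:]*:[[:space:]]*//; s/[[:space:]]*(#.*)?$//' \
    | grep -v '^$' | sort -u
}

# Usage:
#   curl -s "http://$ipscope/robots.txt" | robots_paths
```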

source code

Review the page source code for data left behind. This applies to both HTML and JavaScript.
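For a quick first pass before reading the source by hand, something like the following can list comments and script files. It is a rough sketch: `page_leads` is a hypothetical helper, it only catches comments that fit on one line, and it assumes double-quoted `src` attributes.

```shell
# List HTML comments and script sources found in a page read from stdin.
page_leads() {
  page=$(cat)
  # Single-line HTML comments
  printf '%s\n' "$page" | grep -oE '<!--.*-->' || true
  # Values of src attributes on script tags
  printf '%s\n' "$page" | grep -oE '<script[^>]*src="[^"]*"' \
    | sed -E 's/.*src="([^"]*)"/\1/' || true
}

# Usage:
#   curl -s "http://$ipscope/" | page_leads
```

Each script path it prints is worth downloading and reviewing in turn.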